About MAR 2024

In this workshop, we plan to gather researchers working in neural algorithmic learning, multimodal reasoning, and cognitive models of intelligence to showcase their cutting-edge research, discuss the latest challenges, and bring to the forefront problems in perception and language modeling that are often overlooked but are pivotal to achieving true artificial general intelligence. An emphasis of this workshop is the emerging topic of multimodal algorithmic reasoning, where a reasoning agent is required to automatically deduce new algorithms or procedures for solving real-world tasks, e.g., algorithms that use multimodal foundation models for analysis, synthesis, and planning; new approaches to solving challenging vision-and-language mathematical (Olympiad-type) reasoning problems; deriving winning strategies in multimodal games; and procedures for using tools in robotic manipulation. We hope to take a deep dive into this exciting topic at the intersection of multimodal learning and cognitive science, to understand what we have achieved thus far in machine intelligence and what is still lacking relative to the human way of thinking, through talks from outstanding researchers and faculty that could inspire the audience to search for the missing rungs on the ladder to true intelligence.

A second focus of MAR 2024 is to nudge the vision community toward building neural networks with human-like abilities for abstraction, inference, and generalization. To this end, we propose the SMART-101 challenge as part of this workshop. The challenge is based on the Simple Multimodal Algorithmic Reasoning Task (SMART) and the SMART-101 dataset of vision-and-language algorithmic reasoning puzzles. Solving these abstract visual puzzles requires algorithmic reasoning, which we believe makes them a great test bed for evaluating multimodal large language models, a topic of great current interest and excitement in computer vision. Overall, we strongly believe that combining multimodal abstraction and language reasoning with perspectives from cognition makes our workshop unique among the workshops at CVPR 2024.

Where

Summit 320 (main program) and Arch Exhibit Hall (poster session), Seattle Convention Center

When

8:25 AM - 12:15 PM PDT, Monday, June 17, 2024

Keynote Speakers

Petar Veličković

Google DeepMind

Tom Griffiths

Princeton University

Emilien Dupont

Google DeepMind

Lijuan Wang

Microsoft GenAI

Chelsea Finn

Stanford University

Workshop Paper Talk: Scott O. Murray, Bridget Leonard

Spatial Representations in Multimodal AI Systems.

[video]

Workshop Paper Talk: Dobrik G. Georgiev, Pietro Liò, Davide Buffelli

The Deep Equilibrium Algorithmic Reasoner.

[video]

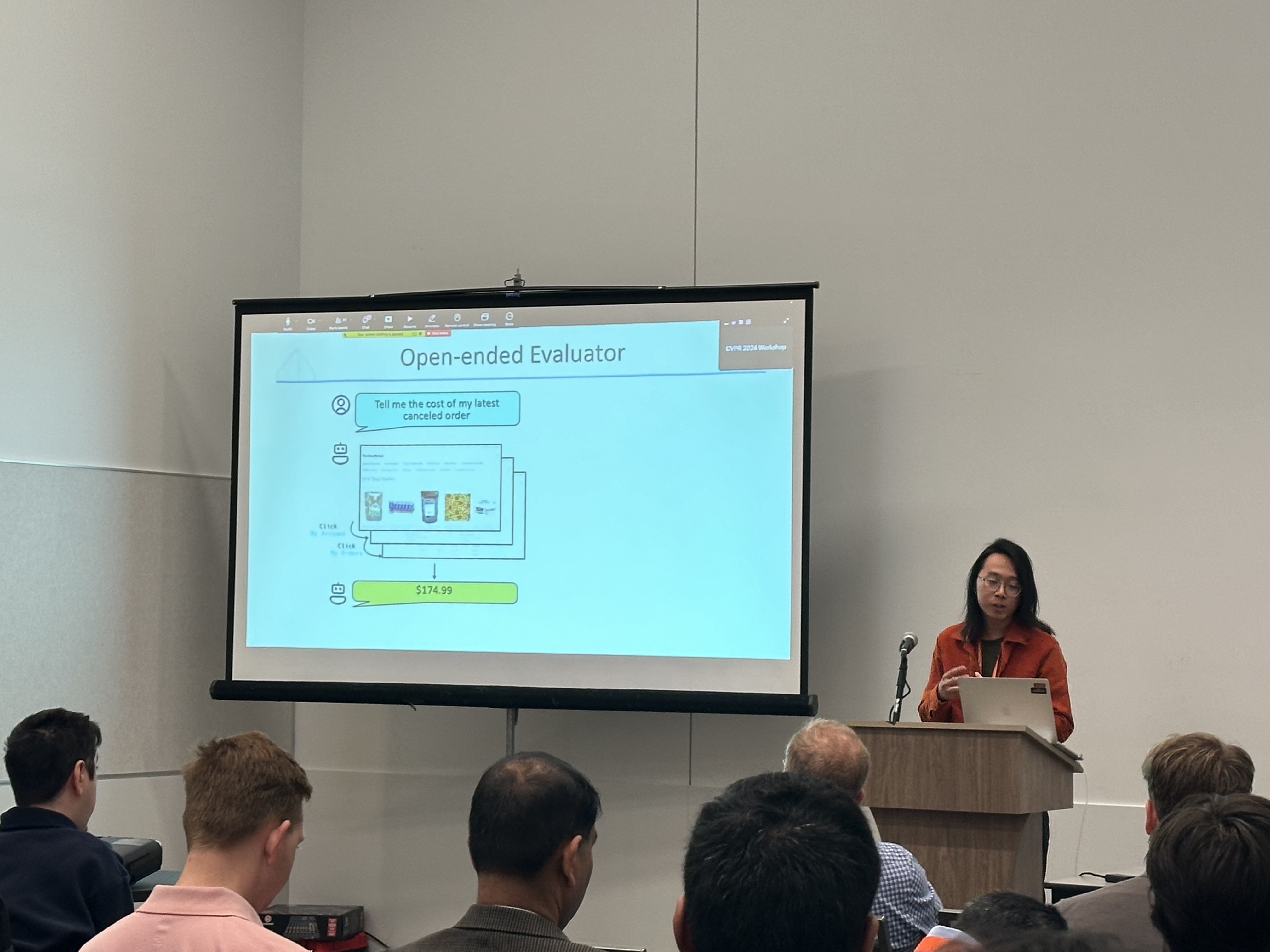

Workshop Paper Talk: Jiayi Pan, Yichi Zhang, Nicholas A. Tomlin, Yifei Zhou, Sergey Levine, Alane Suhr

Autonomous Evaluation and Refinement of Digital Agents.

[video]

Coffee Break

Poster Session

Location: Arch Exhibit Hall (poster board #: 466-471)

Time: 11:45 AM - 12:15 PM

Paper Track: Submission Instructions

We welcome submissions in four sub-tracks:

- Short papers for IEEE/CVF workshop proceedings (≤ 4 pages),

- Long papers for IEEE/CVF workshop proceedings (≤ 8 pages),

- Papers without proceedings (≤ 8 pages), and

- Previously published papers (≤ 8 pages).

Papers accepted in sub-track 3 will be shared only on the workshop website and will not appear in the IEEE/CVF proceedings; authors of such papers may therefore re-submit them elsewhere. We plan to accept only a very limited number of previously published papers (without proceedings) in sub-track 4, and only if the final program schedule permits. The page limits above exclude references.

Paper submissions must be in PDF format and must use the official CVPR 2024 templates. All submissions must be anonymous and conform to the CVPR standards for double-blind review. The (optional) supplementary material has the same deadline as the main paper and should be submitted as a separate file. You will be able to select the paper type (one of the four sub-tracks above) during submission.

Accepted papers will be presented as an oral, spotlight, or poster presentation. At least one author of each accepted submission must present the paper at the workshop. Presentation of accepted papers at MAR 2024 will follow the same policy as for accepted papers at CVPR 2024.

Submission deadline (both main paper & (optional) supplementary material): March

Notification to authors: April 5, 2024.

Camera ready deadline: April 14, 2024 (11:59PM PDT).

Paper Track: Topics

The topics for MAR 2024 include, but are not limited to:

- Multimodal cognition and learning.

- Foundation models of intelligence, including vision, language, and other modalities.

- Large language models, vision, and cognition, including children’s cognition.

- Artificial general intelligence / general-purpose problem solving architectures.

- Neural architectures for solving vision & language or language-based IQ puzzles.

- Embodiment and AI.

- Large language models, neuroscience, and vision.

- Functional and algorithmic / procedural learning in vision.

- Abstract visual-language reasoning, e.g., using sketches, diagrams, etc.

- Perceptual reasoning and decision making.

- New vision-and-language abstract reasoning tasks and datasets.

- Vision-and-language applications.

SMART-101 Challenge Track: Participation Instructions

In the last couple of years, we have seen dramatic improvements in the reasoning abilities of multimodal and large language models. In the SMART-101 CVPR 2024 challenge, we attempt to understand these abilities of large deep models by having them solve visuo-linguistic puzzles that require basic mathematical and algorithmic skills, skills that even young children seem to possess, allowing them to solve the puzzles without much difficulty. A thorough empirical analysis of such abilities of multimodal LLMs is the basic premise of our CVPR 2023 paper, "Are Deep Neural Networks SMARTer than Second Graders?", which introduces the Simple Multimodal Algorithmic Reasoning Task (SMART) and the SMART-101 dataset. Building on that paper, the SMART-101 CVPR 2024 challenge aims to draw research interest to this important topic and to understand where we stand in the race toward true Artificial General Intelligence (AGI). Specifically, the goals of this competition are threefold, namely to understand:

(i) how well state-of-the-art multimodal LLMs abstract data, attend to key details, and generalize their knowledge to solve new problems;

(ii) how fluid they are in acquiring new skills; and

(iii) how effectively they use language for visual reasoning.

Through the state-of-the-art AI models submitted by the participants, we hope to learn where current AI stands in terms of real AGI abilities and, more importantly, to answer clearly whether it is at least better than second graders in mathematical and algorithmic abilities.

The SMART challenge involves solving visuo-linguistic puzzles designed specifically for children aged 6–8. The puzzles are taken from the Math Kangaroo Olympiad, a popular international children's Olympiad that uses a multiple-choice answer format. Most puzzles consist of an image, a text question, and five answer options, exactly one of which is correct. Participant submissions will be evaluated on a private test set. Solving each puzzle requires a mix of basic mathematical and algorithmic reasoning skills, including arithmetic, algebra, spatial reasoning, logical reasoning, measuring, path tracing, pattern matching, and counting.

Important dates for the SMART-101 Challenge Track:

- Challenge open: March 28, 2024.

- Challenge close: June 8, 2024, 7:59:59 PM EDT (GMT-4:00), the time indicated on Eval.AI (updated from June 7, 2024, 11:59 PM PDT).

- arXiv challenge paper deadline (to be considered for awards): June 10, 2024, AoE (Anywhere on Earth) (updated from June 7, 2024, 11:59 PM PDT).

- Public winner announcement: June 17, 2024.

- The challenge is hosted on Eval.AI. Please read the submission guidelines on the Eval.AI challenge page.

- To be considered for prizes, participants must post an arXiv paper detailing their approach and make their implementation publicly available on GitHub. Note that these arXiv papers will not be part of the workshop proceedings.

- Winners of the challenge are determined by both leaderboard performance on the private test set and the quality of the proposed method, as detailed in the participants' arXiv papers and reviewed by a panel. Please see the challenge website for details.

- Prizes will be awarded on the day of the workshop.

- The contact for the SMART-101 challenge: smart101@googlegroups.com.

Accepted Papers

The number in front of each paper is the assigned poster board number for the poster session.

Oral Papers

- [466] Spatial Representations in Multimodal AI Systems.

Scott O. Murray, Bridget Leonard.

- [466] The Deep Equilibrium Algorithmic Reasoner.

Dobrik G. Georgiev, Pietro Liò, Davide Buffelli.

[arXiv]

- [467] Autonomous Evaluation and Refinement of Digital Agents.

Jiayi Pan, Yichi Zhang, Nicholas A. Tomlin, Yifei Zhou, Sergey Levine, Alane Suhr.

[supplement] [arXiv]

Poster Papers

- [467] CLOVA: A Closed-Loop Visual Assistant with Tool Usage and Update.

Zhi Gao, Yuntao Du, Xintong Zhang, Xiaojian Ma, Wenjuan Han, Song-Chun Zhu, Qing Li.

[supplement] [arXiv]

- [468] Using Language-Aligned Gesture Embeddings for Understanding Gestures Accompanying Math Terms.

Tristan Maidment, Purav J. Patel, Erin A. Walker, Adriana Kovashka.

- [468] What does CLIP know about peeling a banana?

Claudia Cuttano, Gabriele Rosi, Gabriele Trivigno, Giuseppe Averta.

[arXiv]

- [469] Transformers meet Neural Algorithmic Reasoners.

Wilfried Bounsi, Borja Ibarz, Andrew J. Dudzik, Jessica Hamrick, Larisa Markeeva, Alex Vitvitskyi, Razvan Pascanu, Petar Veličković.

- [469] Task Navigator: Decomposing Complex Tasks for Multimodal Large Language Models.

Feipeng Ma, Yizhou Zhou, Yueyi Zhang, Siying Wu, Zheyu Zhang, Zilong He, Fengyun Rao, Xiaoyan Sun.

[supplement]

- [470] MIRACLE: Multimodal Image-text Retrieval and Analysis for Contextual Long-form Evaluation.

Md Messal Monem Miah, Agent Chatterjee, Arindam Mitra, Huang Ruihong, Man Luo.

- [470] Multi-Explainable TemporalNet: An Interpretable Multimodal Approach using Temporal Convolutional Network for User-level Depression Detection.

Anas Zafar, Danyal Aftab, Rizwan Qureshi, Yaofeng Wang, Hong Yan.

[supplement]

- [471] Math-Search: A Benchmark for Multi-hop Visual Reasoning over Plots.

Pulkit Madan, Sanjay Haresh, Apratim Bhattacharyya, Litian Liu, Reza Pourreza, Sunny P Panchal, Roland Memisevic.

[supplement]

- [471] ViTA: An Efficient Video-to-Text Algorithm using VLM for RAG-based Video Analysis System.

Md Adnan Arefeen, Biplob Debnath, Md Yusuf Sarwar Uddin, Srimat Chakradhar.

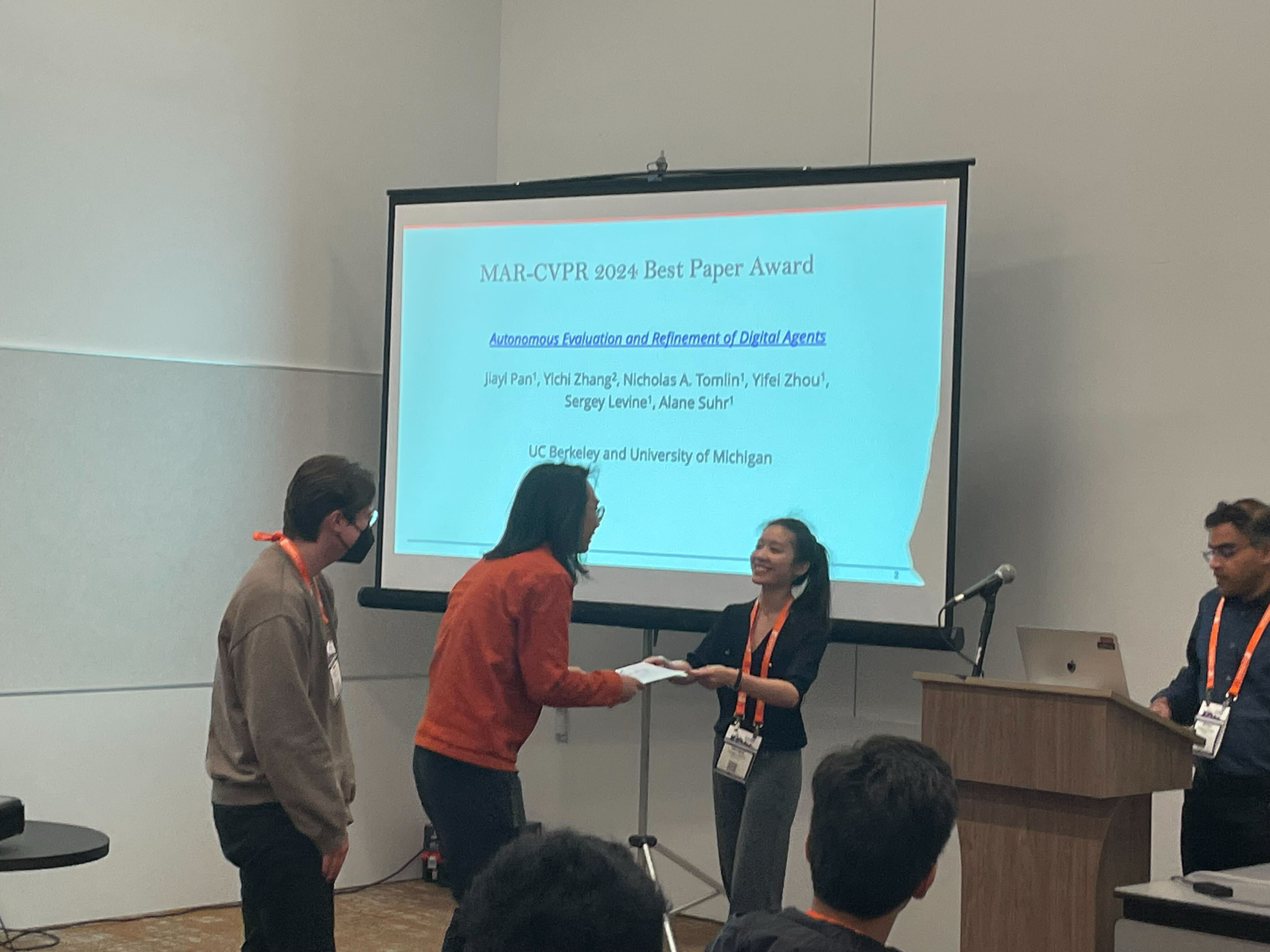

Awards

Best Paper Award

- Autonomous Evaluation and Refinement of Digital Agents.

Jiayi Pan, Yichi Zhang, Nicholas A. Tomlin, Yifei Zhou, Sergey Levine, Alane Suhr.

Challenge Runner-Up Award

- Integrating Text and Image Pre-training for Multi-modal Algorithmic Reasoning.

Zijian Zhang, Wei Liu.

Challenge Winner Award

- Solution for SMART-101 Challenge of CVPR Multi-modal Algorithmic Reasoning Task 2024.

Jinwoo Ahn, Junhyeok Park, Min-Jun Kim, Kang-Hyeon Kim, So-Yeong Sohn, Yun-Ji Lee, Du-Seong Chang, Yu-Jung Heo, Eun-Sol Kim.

Workshop Photos

MAR 2024 Venue

Seattle Convention Center

MAR 2024 will be held in Summit 320 (main program) and the Arch Exhibit Hall (poster session) of the Seattle Convention Center, from 8:25 AM to 12:15 PM PDT on June 17, 2024.

Organizers

Anoop Cherian

Mitsubishi Electric Research Laboratories (MERL)

Kuan-Chuan Peng

Mitsubishi Electric Research Laboratories (MERL)

Suhas Lohit

Mitsubishi Electric Research Laboratories (MERL)

Honglu Zhou

Salesforce Research

Moitreya Chatterjee

Mitsubishi Electric Research Laboratories (MERL)

Kevin Smith

MIT

Tim Marks

Mitsubishi Electric Research Laboratories (MERL)

Joanna Matthiesen

Math Kangaroo USA

Program Committee

| Name | Affiliation |
|---|---|
| Alessandro Salatiello | University of Tübingen |
| Anshul Shah | Apple |
| Ashish Singh | University of Massachusetts Amherst |
| Asim Kadav | Freenome |
| Can Qin | Salesforce Research |
| Deep A. Patel | NEC Laboratories America, Inc. |
| Haoqi Hu | Amazon LLC. |
| Jihoon Chung | Princeton University |
| Michael J. Jones | Mitsubishi Electric Research Laboratories |
| Sameer Khurana | Mitsubishi Electric Research Laboratories |
| Shu Zhang | Salesforce Research |
| Sourya Basu | University of Illinois at Urbana-Champaign |
| Tyler Zhu | Princeton University |
| Yizhou Wang | Northeastern University |